What Is a Transformer Model in AI

Introduction

If you’ve ever wondered how modern AI tools, from ChatGPT to Google Gemini, understand language, write essays, translate text, analyze images, or even generate code, the answer traces back to one groundbreaking innovation: the transformer model. Introduced by Google researchers in 2017, the transformer architecture didn’t just improve AI; it reshaped the entire field. Today, transformers power almost every state-of-the-art system in natural language processing (NLP), computer vision, speech recognition, and even scientific research.

For students entering tech, computer science, or AI-related fields, understanding transformer models is essential. They are the foundation of large language models (LLMs), multimodal AI, and modern generative tools. In this article, we’ll break down what transformer models are, why they matter, how they work under the hood, and where they’re used in the real world. We’ll walk through the concepts step-by-step, using clear analogies, expert insights, and real examples so you feel confident explaining the topic yourself.

What Is a Transformer Model in AI?

A transformer is a type of neural network architecture built to process sequential data (like text), but unlike earlier models, it can handle long sentences, paragraphs, or documents much more effectively. Transformers rely heavily on a mechanism called self-attention, which allows the model to look at all words in a sentence at once rather than one at a time.

This innovation makes transformers:

- Faster to train
- Better at understanding context
- More accurate across long sequences
- Scalable to billions (or trillions) of parameters

If earlier AI models were bicycles, transformers are high-speed trains.

How Transformers Changed AI Forever

Before transformers, NLP relied mainly on:

- RNNs (Recurrent Neural Networks)
- LSTMs (Long Short-Term Memory networks)
- GRUs (Gated Recurrent Units)

These models processed text sequentially, meaning they read one word at a time. This caused several problems:

- Slow training
- Difficulty handling long-range dependencies
- Vanishing/exploding gradients
- Limited scalability

Transformers addressed these problems by enabling parallel processing and long-context understanding through attention.

The original Google paper, “Attention Is All You Need,” reports that the Transformer allows significantly more parallelization than recurrent models and requires far less time to train.
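
The sequential bottleneck of recurrent models can be sketched with a toy one-unit recurrent cell. This is an illustrative simplification, not a real RNN library: the function name `rnn_forward` and the weights `w_in` and `w_hidden` are assumptions chosen for the example.

```python
import math

def rnn_forward(inputs, w_in=0.5, w_hidden=0.8):
    """Run a toy one-unit recurrent cell over a sequence of numbers."""
    hidden = 0.0
    states = []
    for x in inputs:
        # Step t cannot start until step t-1 has produced `hidden`,
        # so the loop cannot be parallelized across positions.
        hidden = math.tanh(w_in * x + w_hidden * hidden)
        states.append(hidden)
    return states

states = rnn_forward([1.0, 0.0, 0.0])
print(states)  # the first input's influence shrinks at every later step
```

Each step depends on the previous one, and the signal from the first input fades as the sequence grows, which is the intuition behind slow training and weak long-range dependencies.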

How Transformer Models Work (Simplified for Students)

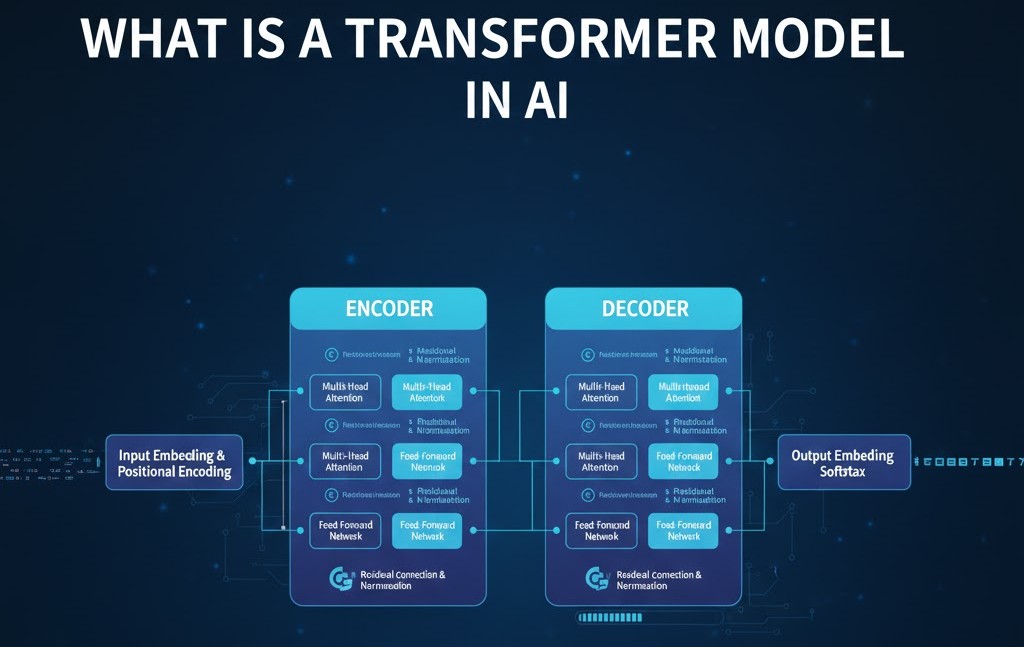

Transformers contain two major components:

- Encoder
- Decoder

Some models use both (e.g., T5, BERT-to-BERT systems).

Some use only the encoder (e.g., BERT).

Some use only the decoder (e.g., GPT).

Let’s break down how the pieces fit together.

1. Input Embeddings: Turning Words Into Numbers

AI models cannot process text directly. They convert words into numerical vectors called embeddings.

For example, each token in “AI is amazing” maps to its own vector:

“AI” → [0.12, -0.43, 0.88, …]

These vectors capture meaning and relationships between words. In transformers, they are combined with positional encodings because self-attention on its own has no notion of word order.
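
As a rough sketch, an embedding layer is a lookup table with one row of numbers per token. The vocabulary, the width `d_model = 4`, and the random values below are assumptions for illustration; in a trained model the table is learned and the tokenizer works on subwords rather than whole words.

```python
import random

# Hypothetical toy vocabulary; real models use learned subword tokenizers.
vocab = {"AI": 0, "is": 1, "amazing": 2}
d_model = 4  # embedding width (assumed; real models use hundreds or thousands)

random.seed(0)
# One row per token id. In a trained model these values are learned, not random.
embedding_table = [[random.uniform(-1, 1) for _ in range(d_model)] for _ in vocab]

def embed(sentence):
    """Map each whitespace-separated word to its embedding vector."""
    token_ids = [vocab[word] for word in sentence.split()]
    return [embedding_table[i] for i in token_ids]

vectors = embed("AI is amazing")
print(len(vectors), len(vectors[0]))  # prints: 3 4
```

The output is one vector per token, not one vector for the whole sentence.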

2. Positional Encoding: Teaching the Model Word Order

Transformers process all words in parallel, so positional encoding acts like giving each word a coordinate in space.

Example:

- “I love apples”
- “Apples love I”

Same words, totally different meanings.

Positional encoding ensures the model understands the difference.
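
One way to build such position signals is the sinusoidal scheme from the original transformer paper, sketched here in plain Python. The function name `positional_encoding` is an assumption for this example, and many newer models use learned or rotary position methods instead.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: one d_model-sized vector per position."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the same frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

pe = positional_encoding(seq_len=3, d_model=4)
print(pe[0])  # prints: [0.0, 1.0, 0.0, 1.0]
```

Each row is added element-wise to the embedding of the token at that position, so the same word receives a different input vector depending on where it appears.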

3. Self-Attention: The Heart of the Transformer

Self-attention is what makes transformers powerful.

It answers:

“Which words should I pay attention to when understanding this word?”

Example:

In the sentence “The cat that scratched the dog ran away,” the word “ran” should attend to “cat,” not “dog.”

Self-attention lets the model make these distinctions by assigning weights to relationships between words.
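
The weighting idea can be sketched as scaled dot-product attention in plain Python. The query, key, and value vectors below are hand-picked assumptions so that “ran” leans toward “cat”; in a real transformer they come from learned projections of the token embeddings.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy vectors for the tokens "cat", "dog", "ran" (illustrative values only).
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
keys = [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = attention(queries, keys, values)
ran_weights = weights[2]
print(ran_weights)  # the largest weight falls on "cat" (index 0)
```

Every query is scored against every key independently, which is why the whole sentence can be processed in parallel.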

Why Self-Attention Matters

It allows the model to:

- Understand long phrases
- Follow complex grammar
- Capture meaning across multiple sentences
- Work with speed and parallelism

This is the core mechanism behind ChatGPT-like performance.

4. Multi-Head Attention: Looking at Context from Different Angles

Instead of a single attention operation, transformers run several in parallel, called heads, each focusing on different relationships. For example:

- One head may track grammar
- Another may track long-term context
- Another may focus on named entities (e.g., people, places)

These perspectives get combined to build a deeper understanding of text.
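
A minimal sketch of the split-and-combine step is shown below. It deliberately omits the learned query, key, value, and output projections that a real multi-head layer applies, so it only illustrates how each head works on its own slice of the vector and how the results are concatenated. All function names are assumptions for this example.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(rows):
    """Self-attention where queries, keys, and values are all `rows`."""
    d_k = len(rows[0])
    outputs = []
    for q in rows:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in rows]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, rows))
                        for j in range(d_k)])
    return outputs

def split_heads(rows, num_heads):
    """Slice every vector into num_heads equal chunks, one group per head."""
    head_dim = len(rows[0]) // num_heads
    return [[row[h * head_dim:(h + 1) * head_dim] for row in rows]
            for h in range(num_heads)]

def multi_head_self_attention(rows, num_heads):
    head_outputs = [attend(head) for head in split_heads(rows, num_heads)]
    # Concatenate each token's per-head outputs back to the full width.
    return [sum((out[t] for out in head_outputs), []) for t in range(len(rows))]

x = [[1.0, 0.0, 0.0, 1.0],
     [0.0, 1.0, 1.0, 0.0],
     [1.0, 1.0, 0.0, 0.0]]
mixed = multi_head_self_attention(x, num_heads=2)
print(len(mixed), len(mixed[0]))  # prints: 3 4
```

Because each head sees a different slice, each can produce a different attention pattern over the same tokens.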

5. Feed-Forward Networks: Processing the Attention Output

After attention, the transformer passes each token’s output through a small feed-forward neural network (FFN) to refine its representation further.
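
A position-wise feed-forward network can be sketched as two linear layers with a ReLU in between, applied to every token separately. The sizes `d_model = 4` and `d_ff = 16` and the random weights are assumptions for illustration; the 4x expansion mirrors the ratio used in the original paper.

```python
import random

def linear(x, weights, bias):
    """y = x @ weights + bias for one vector x; weights is in_dim x out_dim."""
    return [sum(xi * weights[i][j] for i, xi in enumerate(x)) + bias[j]
            for j in range(len(bias))]

def relu(x):
    return [max(0.0, v) for v in x]

def feed_forward(x, w1, b1, w2, b2):
    """Expand to d_ff, apply ReLU, then project back to d_model."""
    return linear(relu(linear(x, w1, b1)), w2, b2)

random.seed(0)
d_model, d_ff = 4, 16
# Random stand-ins for learned weights.
w1 = [[random.gauss(0, 0.1) for _ in range(d_ff)] for _ in range(d_model)]
b1 = [0.0] * d_ff
w2 = [[random.gauss(0, 0.1) for _ in range(d_model)] for _ in range(d_ff)]
b2 = [0.0] * d_model

tokens = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, -0.2]]
# The same weights are applied to each token independently.
outputs = [feed_forward(t, w1, b1, w2, b2) for t in tokens]
print(len(outputs), len(outputs[0]))  # prints: 2 4
```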

6. Layer Normalization and Residual Connections

These help:

- Stabilize training
- Improve model performance
- Avoid vanishing gradients

They allow transformers to scale reliably to 10B, 100B, or even 1T+ parameters.
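
The two ideas fit together as “add the input back, then normalize.” The sketch below follows the post-norm ordering of the original paper and leaves out the learned scale and shift that real layer normalization includes; `toy_sublayer` is a hypothetical stand-in for attention or the feed-forward network.

```python
import math

def layer_norm(x, eps=1e-5):
    """Rescale one vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def residual_block(x, sublayer):
    """Post-norm residual block: LayerNorm(x + sublayer(x))."""
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])

def toy_sublayer(x):
    # Hypothetical stand-in for attention or a feed-forward network.
    return [0.5 * v for v in x]

out = residual_block([1.0, 2.0, 3.0, 4.0], toy_sublayer)
print(out)
```

The residual path gives gradients a direct route through the layer, and the normalization keeps activations in a stable range as layers are stacked. Many recent models apply the normalization before the sublayer instead.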

Encoder vs Decoder: What’s the Difference?

The Encoder: Understanding the Input

The encoder reads text and builds a contextual representation.

Think of it as the reader.

Used in models like:

- BERT
- RoBERTa
- DistilBERT

Great for:

- Classification
- Sentiment analysis
- SEO topic classification
- Named entity recognition

The Decoder: Generating Output

The decoder generates output one token at a time, predicting each next word or token from the ones that came before it.

Used in models like:

- GPT-3
- GPT-4
- Llama
- Claude

Perfect for:

- Writing
- Chatbots
- Story generation
- Translation
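
Next-token generation can be sketched as a loop that repeatedly picks the most likely token and appends it to the context. The score table `TOY_SCORES` is a hypothetical stand-in for a trained decoder and looks only at the most recent token, whereas a real decoder conditions on all earlier tokens.

```python
# Hypothetical next-token scores, keyed by the most recent token.
TOY_SCORES = {
    "<start>": {"the": 2.0, "cat": 0.5, "ran": 0.1, "<end>": 0.0},
    "the": {"the": 0.0, "cat": 2.0, "ran": 0.3, "<end>": 0.1},
    "cat": {"the": 0.1, "cat": 0.0, "ran": 2.0, "<end>": 0.5},
    "ran": {"the": 0.2, "cat": 0.1, "ran": 0.0, "<end>": 2.0},
}

def next_token(context):
    scores = TOY_SCORES[context[-1]]
    return max(scores, key=scores.get)  # greedy: take the highest score

def generate(max_new_tokens=10):
    context = ["<start>"]
    for _ in range(max_new_tokens):
        token = next_token(context)
        if token == "<end>":
            break
        context.append(token)  # the new token becomes part of the input
    return context[1:]

print(generate())  # prints: ['the', 'cat', 'ran']
```

Greedy selection is only one option; production systems often sample from the score distribution instead of always taking the top token.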

The Full Transformer Architecture: Encoder + Decoder

Some systems use both for more complex tasks.

Example models:

- T5 (Text-to-Text Transfer Transformer)
- BART

These are excellent for:

- Summarization
- Paraphrasing
- Question answering

Real-World Examples of Transformers in Action

Transformers power systems across industries:

1. Search Engines (Google, Bing)

Transformers help understand search queries more like humans.

When Google brought BERT to Search in 2019, it said the update would affect roughly 1 in 10 English-language searches in the U.S.

2. Chatbots and Virtual Assistants

Products like:

- ChatGPT
- Gemini
- Copilot
- Amazon Q

These rely on decoder-based transformers to generate natural language.

3. Healthcare and Pharma

Transformers analyze:

- Medical images
- Protein structures
- Clinical notes

DeepMind’s AlphaFold 2, which is built on attention-based components, revolutionized protein structure prediction.

4. Education Tools

Writing assistants such as Grammarly, educational apps, AI tutors, and plagiarism detectors increasingly use transformer-based models to understand student writing.

5. Business and Productivity Apps

Transformers run:

- Meeting transcription
- Email drafting
- Data extraction
- Sentiment analysis

They’re the backbone of modern workplace AI.

Why Transformers Became the Standard in AI

1. Scalability

Transformers scale well to massive datasets and parameter counts, which made the LLM revolution possible.

2. Parallel Processing

Because every token in a sequence is processed at once, training can be spread efficiently across many GPUs or TPUs.

3. Long-Context Understanding

Because attention lets any token draw on any other token in the input, transformer-based LLMs can now work with context windows of 100K+ tokens.
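
Long contexts come at a cost. In standard full self-attention, every token is compared with every other token, so the number of attention scores grows with the square of the sequence length. The helper name below is an assumption for this back-of-the-envelope sketch.

```python
def attention_score_count(num_tokens):
    """Number of query-key scores in one full self-attention map."""
    return num_tokens * num_tokens

for n in (1_000, 10_000, 100_000):
    print(n, attention_score_count(n))
# prints:
# 1000 1000000
# 10000 100000000
# 100000 10000000000
```

A 100x longer input means 10,000x more scores per attention map, which is why long-context models depend on additional engineering beyond the basic architecture.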

4. Multimodal Capabilities

Transformers can handle:

- Text
- Images
- Audio
- Video
- Code

5. State-of-the-Art Accuracy

Transformer-based models currently lead most major benchmarks in language, and many in vision and speech.

Common Terms Students Should Know

Here’s a quick glossary:

- Token: the smallest unit of text the model reads
- Embedding: a numeric representation of a word
- Attention: a mechanism for focusing on relevant information
- Parameters: internal values the model learns
- Context window: how much text the model can process at once
- Fine-tuning: specializing a model on a specific task
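
To make “parameters” concrete, here is a rough count of the weight matrices in one encoder-style transformer layer. It assumes four square attention projections and a two-layer feed-forward network, ignores biases and normalization, and uses the base sizes from the original paper (`d_model = 512`, `d_ff = 2048`).

```python
def layer_parameter_count(d_model, d_ff):
    """Weight-matrix parameters in one layer (biases and norms ignored)."""
    attention = 4 * d_model * d_model   # query, key, value, output projections
    feed_forward = 2 * d_model * d_ff   # expand to d_ff, then project back
    return attention + feed_forward

per_layer = layer_parameter_count(d_model=512, d_ff=2048)
print(per_layer)      # prints: 3145728
print(per_layer * 6)  # prints: 18874368
```

Widening the model or stacking more layers is how parameter counts climb into the billions.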

Advantages and Limitations of Transformers

Advantages

- High accuracy
- Faster training
- Better long-context handling
- Reasoning abilities
- Multimodal flexibility

Limitations

Even transformers aren’t perfect:

- Expensive to train
- Require large amounts of data
- Can hallucinate incorrect facts
- Energy-intensive
- Need careful alignment and safety measures

Understanding these challenges helps students think critically about AI.

Future of Transformer Models: What Students Should Expect

According to leading AI labs and academic researchers, the next evolution of transformer models includes:

- Longer-context models (processing full textbooks)
- Multimodal reasoning (text + audio + image + sensors)
- Agentic behavior with planning abilities
- Smaller, efficient transformers for edge devices
- Hybrid architectures combining transformers with other neural models

Transformers will remain central, but they will become more:

- Efficient
- Reliable
- Interpretable
- Environmentally sustainable

Conclusion

The transformer model isn’t just another AI innovation; it’s the foundation of modern artificial intelligence. Whether you’re a student studying computer science, a beginner interested in AI, or someone planning a tech career, understanding transformers gives you a competitive edge. From self-attention to multi-head mechanisms, from encoders to decoders, these architectures power nearly every major generative AI system today.

As AI continues to evolve, transformers will remain at the heart of breakthroughs in search, education, medicine, language technology, and scientific research. Now that you understand how they work, you’re better prepared to explore deeper topics like fine-tuning, LLM architecture, and multimodal AI.

The future belongs to those who understand the tools shaping it, and transformers are one of the most important tools of all.

FAQs

1. Why are transformer models better than RNNs or LSTMs?

Because they use self-attention, allowing parallel processing and better long-term context understanding.

2. Are transformers only used for text?

No. They’re used for images, audio, video, protein folding, and multimodal AI.

3. What’s the difference between GPT and BERT?

BERT is encoder-only (understands text), while GPT is decoder-only (generates text).
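
One concrete way to see the difference is in which positions each token is allowed to attend to. The sketch below assumes the usual setup: an encoder lets every token see the whole input, while a decoder applies a causal mask so a token sees only itself and earlier tokens. The function name is an assumption for this example.

```python
def visible_positions(seq_len, causal):
    """For each position, list the positions it may attend to."""
    return [list(range(i + 1)) if causal else list(range(seq_len))
            for i in range(seq_len)]

encoder_view = visible_positions(3, causal=False)
decoder_view = visible_positions(3, causal=True)
print(encoder_view)  # prints: [[0, 1, 2], [0, 1, 2], [0, 1, 2]]
print(decoder_view)  # prints: [[0], [0, 1], [0, 1, 2]]
```

Seeing both directions helps with understanding tasks, while the causal view is what makes left-to-right generation possible.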

4. Do transformers require a lot of computing power?

Large models do, but smaller and optimized versions can run on laptops or phones.

5. Are transformers the future of AI?

Most experts believe so, though hybrid architectures may emerge alongside them.
